New virtual laboratory for merging neutron stars

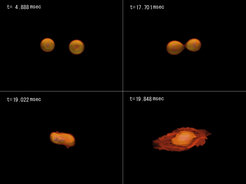

For the first time, a high-performance computer will make it possible to simulate gravitational waves, magnetic fields and neutrino physics of neutron stars simultaneously.

The Computational Relativistic Astrophysics division at the Max Planck Institute for Gravitational Physics (Albert Einstein Institute/AEI) in Potsdam has recently put into operation a computer cluster with 11,600 CPU cores. The new high-performance cluster, called Sakura, is located at the Max Planck Computing and Data Facility (MPCDF) in Garching and will be used for numerical-relativistic simulations of powerful astrophysical events. When neutron stars are born in core-collapse supernovae or merge with each other aeons later, huge amounts of electromagnetic waves, neutrinos, and gravitational waves are emitted. The underlying astrophysical processes are not well understood, and modelling them requires solving highly complex, nonlinear partial differential equations. With Sakura, the scientists will perform physically accurate, high-resolution simulations to significantly improve our understanding of the binary neutron star merger process and the formation of black holes.

The Computational Relativistic Astrophysics division at the AEI focuses on numerical-relativistic simulations of astrophysical events that generate both gravitational waves and electromagnetic radiation by solving Einstein’s equations and matter equations of general relativity on high-performance computers. These simulations play a crucial role in predicting accurate gravitational waveforms for the search in the detector data and for exploring bright high-energy phenomena such as gamma-ray bursts and kilonovae. By using more powerful computers, the scientists can take into account more complicated physics required to understand the astrophysical phenomena. One of the scientists’ ambitious goals is to perform a physically accurate and high-resolution simulation to understand how binary neutron stars merge.

“High-performance computer clusters are our virtual laboratories,” says Masaru Shibata, director of the Computational Relativistic Astrophysics division. “We cannot create neutron stars in a lab, let them merge and monitor what happens. But we can predict what will occur during the coalescence of two neutron stars by taking into account all the important processes and accurately solving the corresponding equations that describe their behaviour. These calculations require a tremendous amount of computing power and often last several months even on very large computers. With Sakura we now have 11,600 CPU cores with 0.92 petaFLOP/s for these numerical simulations at our disposal.”

In previous calculations, the scientists were not able to take into account both the effects of magnetic fields and neutrino physics in one simulation. Masaru Shibata explains: “Besides the fact that the code is still under development, the computational resources play a crucial role. With the new large computer, we think it possible to perform a simulation taking into account magnetic fields and neutrino physics together and get the full picture of neutron star merger physics.”

Besides the new high-performance computer cluster Sakura (“cherry blossom” in Japanese) in Garching, the division runs two smaller compute servers at the AEI in Potsdam: “Yamazaki” (the Japanese word for “mountains”) and “Tani” (which means “valley” in Japanese). “We run small jobs on small computers,” explains Masaru Shibata. “We use our in-house computer power for developing the compute codes and for test runs.” The local infrastructure is also needed for data analysis of the simulations performed at the Garching computing center.

Technical specifications

Sakura in Garching is part of the MPCDF Computing Center infrastructure and is connected through a fast Omni-Path 100 network and 10 Gb Ethernet connections. It consists of head nodes with Intel Xeon Silver 10-core processors and 192 GB to 384 GB of main memory, as well as compute nodes with Intel Xeon Gold 6148 CPUs.

The Yamazaki compute servers in Potsdam are 13 standalone nodes, each with four Intel Xeon Gold processors (18 cores per processor) and 192 GB to 256 GB of main memory.
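As a rough back-of-the-envelope check, the figures quoted in this article can be combined into a few derived numbers. The short Python sketch below is only illustrative: the function names are made up for this example, the value of 20 cores per Xeon Gold 6148 comes from Intel's published specification rather than from the article, and it assumes that all 11,600 cores sit in compute-node CPUs of that type.

```python
# Figures quoted in the article
SAKURA_CORES = 11_600        # CPU cores in Sakura
SAKURA_PEAK_PFLOPS = 0.92    # quoted performance in petaFLOP/s
YAMAZAKI_NODES = 13          # standalone servers in Potsdam
CPUS_PER_NODE = 4            # processors per Yamazaki server
CORES_PER_YAMAZAKI_CPU = 18  # cores per Yamazaki processor

# Assumption (not stated in the article): a Xeon Gold 6148 has 20 cores,
# and every Sakura core belongs to a CPU of this type.
CORES_PER_6148 = 20


def per_core_gflops(peak_pflops: float, cores: int) -> float:
    """Average quoted performance per core in gigaFLOP/s."""
    return peak_pflops * 1e6 / cores  # 1 petaFLOP/s = 1e6 gigaFLOP/s


def cpu_count(cores: int, cores_per_cpu: int) -> int:
    """Number of CPUs needed to provide the given number of cores."""
    return cores // cores_per_cpu


def total_cores(nodes: int, cpus_per_node: int, cores_per_cpu: int) -> int:
    """Total core count of a set of identical multi-processor servers."""
    return nodes * cpus_per_node * cores_per_cpu


sakura_gflops_per_core = per_core_gflops(SAKURA_PEAK_PFLOPS, SAKURA_CORES)
sakura_cpus = cpu_count(SAKURA_CORES, CORES_PER_6148)
yamazaki_cores = total_cores(YAMAZAKI_NODES, CPUS_PER_NODE, CORES_PER_YAMAZAKI_CPU)

print(f"Sakura: about {sakura_gflops_per_core:.1f} GFLOP/s per core")
print(f"Sakura: {sakura_cpus} CPUs under the 20-core assumption")
print(f"Yamazaki: {yamazaki_cores} cores in total")
```

Under these assumptions, Sakura would comprise 580 CPUs delivering roughly 79 GFLOP/s per core, and the Yamazaki servers would add 936 cores for development and test runs.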

To store, work on and analyse smaller parts of the huge simulation outputs from the Garching cluster (60 terabytes every 3 months), the AEI in Potsdam uses a 500 TB storage system called Tani (2 JBODs with 60 disks of 10 TB each, in RAID 1 redundancy).
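The storage figures can be put in perspective with a similar sketch. The article does not say what fraction of the simulation output is actually copied to Potsdam, so the `fraction_kept` parameter below is purely hypothetical, as are the function names; the calculation only shows how long 500 TB would last at a given fraction.

```python
# Figures quoted in the article
OUTPUT_TB_PER_QUARTER = 60   # simulation output from Garching every 3 months
TANI_CAPACITY_TB = 500       # capacity of the Tani storage in Potsdam


def yearly_output_tb(tb_per_quarter: float) -> float:
    """Simulation output per year, given the output per 3 months."""
    return tb_per_quarter * 4


def months_until_full(capacity_tb: float, tb_per_quarter: float,
                      fraction_kept: float) -> float:
    """Months until the storage is full if a fixed fraction of the output
    is kept and nothing is ever deleted (a hypothetical simplification)."""
    monthly_tb = tb_per_quarter / 3 * fraction_kept
    return capacity_tb / monthly_tb


per_year = yearly_output_tb(OUTPUT_TB_PER_QUARTER)
worst_case = months_until_full(TANI_CAPACITY_TB, OUTPUT_TB_PER_QUARTER, 1.0)

print(f"Output per year: {per_year} TB")
print(f"Months until full if everything were kept: {worst_case}")
```

At 240 TB of output per year, Tani would fill up in about 25 months even if every byte were kept, which is consistent with its stated role of holding only smaller parts of the output.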